The various parts of an Ather (battery, motor, dashboard, light, horn, key, speakers) are connected and controlled by software. We have ~50 sensors that generate data at high frequencies. This data, besides being used to run the vehicle, is transmitted to the cloud (Read this to know more about Software at Ather). We use this time-series data to shorten service turnaround time, diagnose issues faster, and offer features like near real-time ride statistics and predictive maintenance. This data also allows us to iterate on our products and make them better, faster.

These use-cases don’t seem any different from those of any IoT company today, right?

Well, that’s where Ather’s software stack is different. This data also provides us with a unique opportunity to change the face of mobility as it is today.

Think ‘safety features’. Think ‘detecting and automating the simplest of rider actions to enhance overall ride experience’. Think ‘ADAS (Advanced Driver Assistance Systems) for an electric two-wheeler’.

Our Edge AI Module — The Intelligence Engine

Our automatic indicator-off feature detects that the vehicle has turned and switches the indicator off, all within milliseconds. Unlike the equivalent on other two-wheelers or cars, the feature is not based on the turning of the steering or handlebar; it works off patterns in the changing angles reported by the IMU (inertial measurement unit). We have also developed a feature that identifies anomalies in movement to signal potential theft/tow. In addition, we provide accurate, personalized estimates of the distance a rider can cover on the charge left in the vehicle, based on their riding patterns.
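To make the idea of cancelling an indicator from orientation patterns concrete, here is a minimal sketch. It is not Ather's algorithm: the function name, the thresholds, and the assumption that heading samples in degrees are already available from the IMU are all hypothetical, and angle wrap-around at 360 degrees is ignored for brevity.

```python
def indicator_should_cancel(yaw_deg, turn_threshold_deg=45.0,
                            settle_deg=2.0, settle_samples=5):
    """Decide whether to cancel the indicator (hypothetical heuristic).

    yaw_deg: heading samples in degrees, recorded since the indicator
    was switched on. All thresholds are illustrative assumptions.
    """
    if len(yaw_deg) <= settle_samples:
        return False  # not enough data to judge yet

    # Total heading change since the indicator came on.
    total_change = abs(yaw_deg[-1] - yaw_deg[0])

    # The turn is "finished" when the last few sample-to-sample
    # changes are all small, i.e. the heading has stopped moving.
    recent = yaw_deg[-(settle_samples + 1):]
    settled = all(abs(b - a) < settle_deg
                  for a, b in zip(recent, recent[1:]))

    return total_change >= turn_threshold_deg and settled
```

The function only returns true once the heading has both changed substantially and stopped changing, so a rider who is still mid-turn keeps the indicator on.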

While we are still scratching the surface, our data science team has built smart features with IMU data streamed in real time and stateful processing at the core. Powering these are Kalman filters, Recursive Least Squares Estimation and other probabilistic models (Read more about our Algorithmic Architecture here). They help us estimate the state of charge (SoC) of the battery, detect vehicle maneuvers, estimate changes in vehicle orientation, and run many other concurrent computations that supply data to the higher-level algorithms.
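As an illustration of the stateful, recursive style of estimator mentioned above, the following is a textbook one-dimensional Kalman filter. It is a generic sketch rather than the model used on the vehicle: the class name, the noise variances `q` and `r`, and the optional known input `u` are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """Textbook 1-D Kalman filter (illustrative, not Ather's model)."""
    x: float  # current state estimate
    p: float  # variance of the estimate
    q: float  # process noise variance (assumed)
    r: float  # measurement noise variance (assumed)

    def step(self, z: float, u: float = 0.0) -> float:
        # Predict: shift the state by a known input u and let the
        # uncertainty grow by the process noise.
        self.x += u
        self.p += self.q

        # Update: blend prediction and measurement z using the
        # Kalman gain k, then shrink the uncertainty.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)
        return self.x
```

Each call keeps only two numbers of state (`x` and `p`), which is what makes this family of filters attractive on a memory-constrained device processing a high-frequency stream.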

Most of the ride-critical features (key on, accelerate based on throttle, brake, etc.) require the algorithms to churn out data at high frequency with low latency, resulting in intensive computation and high energy consumption. As we work towards adding more features, the dashboard could become resource-constrained, limited in both compute bandwidth and memory. This tension between resource-hungry applications and resource-constrained devices poses a significant challenge.

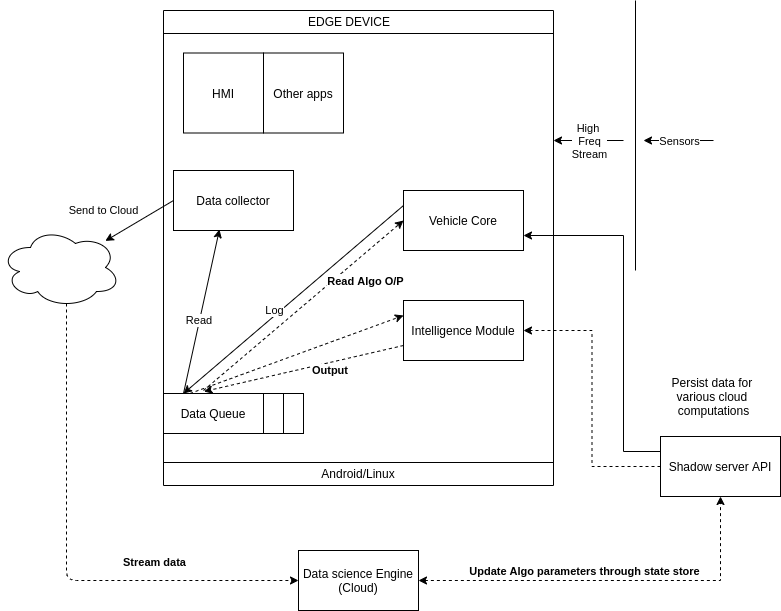

While centralized computing at the edge does give us an upper hand on latency, we are moving towards a distributed computing architecture in which feature algorithms with low-latency requirements run locally, while more advanced algorithms with moderate latency requirements are distributed between the edge and the cloud counterpart of the intelligence engine. The application at the edge is capable of triggering event-based computation on the cloud instance and communicating back with the core for any downstream decision-making.

The vehicle’s core software application performs the necessary ride-critical operations, but the intelligence module runs independently, parsing and processing all of the messages transmitted by the sensors in real time. This lets us cleanly separate the functioning of the algorithms from the base functioning of the scooter, making the architecture robust. The core application only looks for the model outputs it needs on the message queue (MQ), and only when it needs them.

The intelligence module is capable of cloud-edge computing. For medium-latency features, the edge counterpart can identify a pattern-based trigger and hand the complex computation to the cloud counterpart, which sends the result back to the edge module for further decision-making.
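The split between local and cloud execution can be sketched as a small dispatcher. Everything here is hypothetical: the `Feature` type, the `EDGE_BUDGET_MS` cut-off, and the `cloud_call` callable standing in for whatever transport links the edge and cloud counterparts.

```python
from dataclasses import dataclass
from typing import Callable, Optional

EDGE_BUDGET_MS = 50.0  # hypothetical cut-off for "must run locally"


@dataclass
class Feature:
    name: str
    latency_budget_ms: float
    trigger: Callable[[dict], bool]   # cheap pattern check, runs on edge
    compute: Callable[[dict], dict]   # local computation


def handle(feature: Feature, message: dict,
           cloud_call: Callable[[str, dict], dict]) -> Optional[dict]:
    """Run a feature on the edge or delegate it to the cloud."""
    if not feature.trigger(message):
        return None  # nothing interesting in this message

    if feature.latency_budget_ms <= EDGE_BUDGET_MS:
        # Low-latency feature: compute locally.
        return {"where": "edge", **feature.compute(message)}

    # Medium-latency feature: the edge only detected the trigger;
    # the heavy computation happens in the cloud and comes back.
    return {"where": "cloud", **cloud_call(feature.name, message)}
```

In this sketch the trigger always runs on the edge, so the cloud is contacted only when an event is actually detected.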

Rapid development, deployment and feature improvements

Our intelligence module has a robust design and with a few tweaks, the application can run on the cloud on demand, similar to any streaming process. It was specifically designed to allow testing and validation of different algorithms on the cloud, before they are deployed to the edge. This way, algorithm improvements can happen quickly and once we are confident, they can be pushed to production on the edge for the actual use-case.

The intelligence module is also designed to be configurable on the fly. The models and their parameters, such as thresholds, can be tuned as we learn and improve their performance. We are also able to control the algorithms in real time: if an algorithm has to be turned off, we can do so within a few seconds while maintaining a consistent user experience.
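One way to picture on-the-fly configuration is a registry whose entries can be patched at runtime. This is a sketch under assumptions: `AlgorithmRegistry`, the shape of the config dictionary, and the example algorithm names are invented for illustration and do not describe the actual module.

```python
class AlgorithmRegistry:
    """Holds algorithms with runtime-adjustable flags and parameters."""

    def __init__(self):
        self._algos = {}

    def register(self, name, fn, **params):
        # New algorithms start enabled with their default parameters.
        self._algos[name] = {"fn": fn, "enabled": True,
                             "params": dict(params)}

    def apply_config(self, config):
        """Apply a patch such as {"algo": {"enabled": False}}."""
        for name, patch in config.items():
            entry = self._algos.get(name)
            if entry is None:
                continue  # ignore config for unknown algorithms
            entry["enabled"] = patch.get("enabled", entry["enabled"])
            entry["params"].update(patch.get("params", {}))

    def run(self, message):
        # Only enabled algorithms see the message.
        return {name: e["fn"](message, **e["params"])
                for name, e in self._algos.items() if e["enabled"]}
```

A hypothetical patch such as `{"tow_detection": {"enabled": False}}` would take effect on the next message, without restarting the process.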

The performance of all of these algorithms is logged and measured to guide further improvements and new features. The data from these algorithms, along with sufficient training data, is passed to the cloud, where our data scientists can iterate on the algorithms, re-train the models, test and deploy them within the cloud, and then release them to production on the edge.

The Ather stack supports over-the-air (OTA) updates. Performing OTA on a vehicle full of embedded systems is tricky and gets even trickier when you have to update multiple algorithms for different users and use-cases. With partial OTA and the ability to inject only the necessary algorithms into various clusters of Ather bikes, we can employ lightweight OTAs to rapidly improve and introduce new features. This also ensures that resources on the vehicle’s compute core are optimally utilized without hampering the ride experience.
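The core of a partial update is working out which algorithms on a given vehicle actually differ from what its cluster should be running. The sketch below assumes, purely for illustration, that both sides can be described as a mapping from algorithm name to version string; the function and the names in the usage example are hypothetical.

```python
def plan_partial_update(installed, cluster_manifest):
    """Return only the algorithms that need to be shipped.

    installed: {algorithm name: version} currently on the vehicle.
    cluster_manifest: {algorithm name: version} wanted for the
    vehicle's cluster. Both shapes are assumptions for this sketch.
    """
    return {name: version
            for name, version in cluster_manifest.items()
            if installed.get(name) != version}


# Hypothetical usage: one algorithm is current, one is outdated,
# and one is new to this vehicle.
installed = {"indicator_off": "1.2.0", "tow_detect": "0.9.1"}
manifest = {"indicator_off": "1.2.0", "tow_detect": "1.0.0",
            "fall_detect": "0.1.0"}
payload = plan_partial_update(installed, manifest)
```

Here `payload` contains only `tow_detect` and `fall_detect`, so the unchanged algorithm is never re-sent.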

Smarter features and more

Cloud-edge computing allows us to innovate beyond the traditional use-cases, as personalization and safety are key to the future of mobility. With features like Real-time Fall/Accident detection, Theft/Tow detection, and advanced Diagnostics and Alerting, safety becomes a key part of the Ather ownership experience. Our data science team is currently in the early stages of building unique capabilities and use-cases. We are exploring the possibilities of being able to detect road terrains (bumps, potholes, slopes) and more, tailored to provide a connected experience for every rider on each ride.

Having the edge module considerably reduces the communication costs of a cloud-only model. In other words, the Intelligence Engine moves the data and its processing to the closest point of interaction with the user/server. This removes the need to transmit millions of bytes and store them in the cloud, and sidesteps the bandwidth and latency limitations that constrain data transmission. We now have the flexibility to transmit only the necessary events, on demand, to train our algorithms.
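Transmitting only the necessary events can be sketched with a fixed-size buffer that is snapshotted when something interesting happens. The `EventUploader` class, its `outbox` list standing in for the uplink, and the window size are assumptions made for this example.

```python
from collections import deque


class EventUploader:
    """Keeps a rolling window locally; queues it only on an event."""

    def __init__(self, window=50):
        # Old samples fall off automatically once the window is full.
        self._buf = deque(maxlen=window)
        self.outbox = []  # stand-in for the uplink to the cloud

    def push(self, sample, is_event):
        self._buf.append(sample)
        if is_event:
            # Snapshot the samples leading up to (and including)
            # the event; everything else never leaves the vehicle.
            self.outbox.append(list(self._buf))
```

With a window of 10 and a single event in a stream of 100 samples, only 10 samples are queued for upload instead of all 100.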
